Dataset conversion for YOLOv5

From VOC dataset to COCO dataset

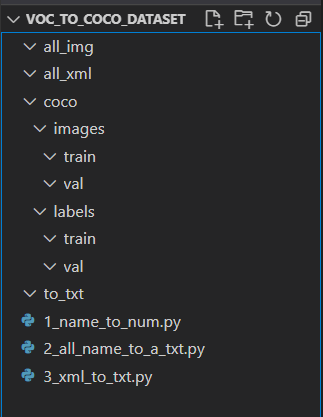

File structure

1_name_to_num.py

```python
import os
```
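Only the first line of this script survives here. Judging from the file name alone, it renames the samples to numbers. A minimal sketch of that idea follows; it is not the original script. The function name, the directory arguments, and the zero-padding width are all hypothetical, and it copies into new directories rather than renaming in place so that existing numeric names cannot collide.

```python
import shutil
from pathlib import Path


def copy_with_numeric_names(image_dir, xml_dir, out_image_dir, out_xml_dir, width=6):
    """Copy image/XML pairs to new directories under zero-padded numeric names.

    Returns a dict mapping each original stem to its new numeric stem.
    Images without a matching XML file are skipped.
    (Hypothetical helper: names and layout are assumptions, not from the post.)
    """
    image_dir, xml_dir = Path(image_dir), Path(xml_dir)
    out_image_dir, out_xml_dir = Path(out_image_dir), Path(out_xml_dir)
    out_image_dir.mkdir(parents=True, exist_ok=True)
    out_xml_dir.mkdir(parents=True, exist_ok=True)

    image_suffixes = {".jpg", ".jpeg", ".png"}
    images = sorted(p for p in image_dir.iterdir() if p.suffix.lower() in image_suffixes)

    mapping = {}
    for image_path in images:
        xml_path = xml_dir / (image_path.stem + ".xml")
        if not xml_path.exists():
            continue  # no annotation for this image, so leave it out
        new_stem = str(len(mapping)).zfill(width)
        # Image and annotation get the same new stem so they stay paired.
        shutil.copy2(image_path, out_image_dir / (new_stem + image_path.suffix.lower()))
        shutil.copy2(xml_path, out_xml_dir / (new_stem + ".xml"))
        mapping[image_path.stem] = new_stem
    return mapping
```

Keeping the returned mapping around makes it possible to trace a numbered sample back to its original file.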

2_all_name_to_a_txt.py

```python
import os
```
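Here too only the import line survives. Going by the file name, the script collects all sample names into a single text file. The sketch below shows one plausible way to do that; `write_stems` and its parameters are hypothetical names, and the one-stem-per-line format is an assumption.

```python
from pathlib import Path


def write_stems(image_dir, list_file, suffixes=(".jpg", ".jpeg", ".png")):
    """Write the stem of every image in image_dir to list_file, one per line.

    Returns the sorted list of stems that was written.
    (Hypothetical helper: the output format is an assumption.)
    """
    stems = sorted(
        p.stem for p in Path(image_dir).iterdir() if p.suffix.lower() in suffixes
    )
    text = "\n".join(stems) + ("\n" if stems else "")
    Path(list_file).write_text(text, encoding="utf-8")
    return stems
```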

3_xml_to_txt.py

```python
# import packages
```
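The body of this script is also missing. The conversion it is named after usually takes the pixel-coordinate boxes in a VOC XML file and turns them into YOLO label lines of the form `class x_center y_center width height`, with all four values normalized by the image size. The sketch below illustrates that arithmetic under stated assumptions. It is not the author's code, the function name is hypothetical, and it assumes the XML object names are `no_mask` and `mask`, matching `names` in the dataset YAML.

```python
import xml.etree.ElementTree as ET

# Assumption: the order matches `names` in the dataset YAML, so that the
# index written to the label file is the class id YOLOv5 expects.
CLASSES = ["no_mask", "mask"]


def voc_xml_to_yolo_lines(xml_text, classes=CLASSES):
    """Convert one VOC annotation (as an XML string) to YOLO label lines."""
    root = ET.fromstring(xml_text)
    size = root.find("size")
    img_w = float(size.find("width").text)
    img_h = float(size.find("height").text)

    lines = []
    for obj in root.iter("object"):
        name = obj.find("name").text.strip()
        if name not in classes:
            continue  # skip classes that are not being trained
        box = obj.find("bndbox")
        xmin = float(box.find("xmin").text)
        ymin = float(box.find("ymin").text)
        xmax = float(box.find("xmax").text)
        ymax = float(box.find("ymax").text)
        # Corner coordinates -> normalized center and size.
        x_center = (xmin + xmax) / 2 / img_w
        y_center = (ymin + ymax) / 2 / img_h
        width = (xmax - xmin) / img_w
        height = (ymax - ymin) / img_h
        lines.append(
            f"{classes.index(name)} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"
        )
    return lines
```

For a 200×100 image with a `mask` box from (50, 20) to (150, 60), this yields the single line `1 0.500000 0.400000 0.500000 0.400000`. Each image then gets one `.txt` file holding its lines.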

YOLOv5 demo

Three files mainly need to be modified:

- coco.yaml

```yaml
# YOLOv5 🚀 by Ultralytics, GPL-3.0 license
# COCO 2017 dataset http://cocodataset.org by Microsoft
# Example usage: python train.py --data coco.yaml
# parent
# ├── yolov5
# └── datasets
#     └── coco  ← downloads here (20.1 GB)


# Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]
#path: ./dataset/coco  # dataset root dir
train: ./dataset/coco/images/train  # train images (relative to 'path') 118287 images
val: ./dataset/coco/images/val  # val images (relative to 'path') 5000 images
#test: test-dev2017.txt  # 20288 of 40670 images, submit to https://competitions.codalab.org/competitions/20794

# Classes
nc: 2  # number of classes
names: ['no_mask', 'mask']  # class names


# Download script/URL (optional)
#download: |
#  from utils.general import download, Path
#
#
#  # Download labels
#  segments = False  # segment or box labels
#  dir = Path(yaml['path'])  # dataset root dir
#  url = 'https://github.com/ultralytics/yolov5/releases/download/v1.0/'
#  urls = [url + ('coco2017labels-segments.zip' if segments else 'coco2017labels.zip')]  # labels
#  download(urls, dir=dir.parent)
#
#  # Download data
#  urls = ['http://images.cocodataset.org/zips/train2017.zip',  # 19G, 118k images
#          'http://images.cocodataset.org/zips/val2017.zip',  # 1G, 5k images
#          'http://images.cocodataset.org/zips/test2017.zip']  # 7G, 41k images (optional)
#  download(urls, dir=dir / 'images', threads=3)
```

- yolov5s.yaml

```yaml
# YOLOv5 🚀 by Ultralytics, GPL-3.0 license

# Parameters
nc: 2  # number of classes
depth_multiple: 0.33  # model depth multiple
width_multiple: 0.50  # layer channel multiple
anchors:
  - [10,13, 16,30, 33,23]  # P3/8
  - [30,61, 62,45, 59,119]  # P4/16
  - [116,90, 156,198, 373,326]  # P5/32

# YOLOv5 v6.0 backbone
backbone:
  # [from, number, module, args]
  [[-1, 1, Conv, [64, 6, 2, 2]],  # 0-P1/2
   [-1, 1, Conv, [128, 3, 2]],  # 1-P2/4
   [-1, 3, C3, [128]],
   [-1, 1, Conv, [256, 3, 2]],  # 3-P3/8
   [-1, 6, C3, [256]],
   [-1, 1, Conv, [512, 3, 2]],  # 5-P4/16
   [-1, 9, C3, [512]],
   [-1, 1, Conv, [1024, 3, 2]],  # 7-P5/32
   [-1, 3, C3, [1024]],
   [-1, 1, SPPF, [1024, 5]],  # 9
  ]

# YOLOv5 v6.0 head
head:
  [[-1, 1, Conv, [512, 1, 1]],
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],
   [[-1, 6], 1, Concat, [1]],  # cat backbone P4
   [-1, 3, C3, [512, False]],  # 13

   [-1, 1, Conv, [256, 1, 1]],
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],
   [[-1, 4], 1, Concat, [1]],  # cat backbone P3
   [-1, 3, C3, [256, False]],  # 17 (P3/8-small)

   [-1, 1, Conv, [256, 3, 2]],
   [[-1, 14], 1, Concat, [1]],  # cat head P4
   [-1, 3, C3, [512, False]],  # 20 (P4/16-medium)

   [-1, 1, Conv, [512, 3, 2]],
   [[-1, 10], 1, Concat, [1]],  # cat head P5
   [-1, 3, C3, [1024, False]],  # 23 (P5/32-large)

   [[17, 20, 23], 1, Detect, [nc, anchors]],  # Detect(P3, P4, P5)
  ]
```

- Several parameters in train.py

```python
def parse_opt(known=False):
    parser = argparse.ArgumentParser()
    parser.add_argument('--weights', type=str, default='yolov5s.pt', help='initial weights path')
    parser.add_argument('--cfg', type=str, default='yolov5s.yaml', help='model.yaml path')
    parser.add_argument('--data', type=str, default='coco.yaml', help='dataset.yaml path')
    parser.add_argument('--hyp', type=str, default=ROOT / 'data/hyps/hyp.scratch-low.yaml', help='hyperparameters path')
    parser.add_argument('--epochs', type=int, default=300)
    parser.add_argument('--batch-size', type=int, default=64, help='total batch size for all GPUs, -1 for autobatch')
    parser.add_argument('--imgsz', '--img', '--img-size', type=int, default=640, help='train, val image size (pixels)')
    # ...
```
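Because these are ordinary `argparse` defaults, the same values can probably also be supplied on the command line instead of being edited into `train.py`. The sketch below is a stripped-down stand-in for the excerpt above, not the real `train.py`. It omits `--hyp` (which depends on `ROOT`), and the `argv` parameter is a hypothetical addition so the parser can be exercised without a shell. It shows how the option strings map to attribute names and how a command-line value overrides a default.

```python
import argparse


def parse_opt(argv=None):
    """Stand-in parser with the same options and defaults as the excerpt."""
    parser = argparse.ArgumentParser()
    parser.add_argument('--weights', type=str, default='yolov5s.pt', help='initial weights path')
    parser.add_argument('--cfg', type=str, default='yolov5s.yaml', help='model.yaml path')
    parser.add_argument('--data', type=str, default='coco.yaml', help='dataset.yaml path')
    parser.add_argument('--epochs', type=int, default=300)
    parser.add_argument('--batch-size', type=int, default=64, help='total batch size for all GPUs')
    parser.add_argument('--imgsz', '--img', '--img-size', type=int, default=640, help='image size (pixels)')
    return parser.parse_args(argv)


# No arguments: the defaults written into the file apply.
defaults = parse_opt([])
print(defaults.imgsz, defaults.batch_size)  # 640 64

# '--img' is an alias of '--imgsz'; '--batch-size' is stored as 'batch_size'.
custom = parse_opt(['--img', '416', '--batch-size', '16'])
print(custom.imgsz, custom.batch_size)  # 416 16
```

Editing the defaults, as the post does, means `python train.py` can be run with no arguments. Passing flags keeps the repository file unchanged.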
